Last updated 16 months ago

AMD makes AI statement with new hardware and roadmap, set to rival Nvidia in the data center

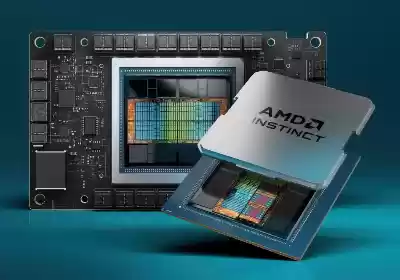

Forward-looking: These days, every time a major tech company hosts an event, it almost inevitably ends up discussing its strategy and products focused on AI. That's exactly what happened at AMD's Advancing AI event in San Jose this week, where the semiconductor company made several significant announcements. The company unveiled the Instinct MI300 line of GPU-based AI accelerators for data centers. It also discussed the expanding software ecosystem for those products, laid out its roadmap for AI-accelerated PC silicon, and introduced other intriguing technological advances.

In truth, there was relatively little "truly new" news, and yet you couldn't help but walk away from the event feeling impressed. AMD told a solid and comprehensive product story. It highlighted a large (perhaps even too large?) range of customers and partners, and it demonstrated the scrappy, competitive ethos of the company under CEO Lisa Su.

On a practical level, I also walked away even more certain of three things about the company. It is going to be a serious competitor to Nvidia in AI training and inference. It will remain a leader in supercomputing and other high-performance computing (HPC) applications. And it will be an increasingly capable competitor in the upcoming AI PC market. Not bad for a 2-hour keynote.

Not surprisingly, most of the event's attention was on the new Instinct MI300X. The chip is clearly positioned as a competitor to Nvidia's market-dominating GPU-based AI accelerators, such as the H100. Much of the tech world has become infatuated with the GenAI performance enabled by the combination of Nvidia's hardware and CUDA software. Even so, there is a rapidly growing recognition that Nvidia's utter dominance of the market isn't healthy for the long term.

As a result, there's been a lot of pressure on AMD to come up with a reasonable alternative. That pressure is especially strong because AMD is generally seen as the only serious competitor to Nvidia on the GPU front.

So far, the MI300X has triggered enormous sighs of relief heard 'round the world, because initial benchmarks suggest that AMD achieved exactly what many were hoping for. Specifically, AMD claimed that the chip matches the performance of Nvidia's H100 on AI model training and offers up to a 60% improvement on AI inference workloads.

AMD also claimed that combining eight MI300X cards into one system would create the fastest generative AI computer in the world. According to AMD, such a system would also offer access to significantly more high-speed memory than the current Nvidia alternative. To be fair, Nvidia has already announced the GH200 (codenamed "Grace Hopper"), which is expected to offer even better performance. As is almost always the case in the semiconductor world, this is bound to be a game of performance leapfrog for many years to come. However people choose to accept or challenge the benchmarks, the key point is that AMD is now ready to play the game.

Given that level of performance, it wasn't surprising to see AMD parade a long list of partners across the stage. The list ranged from major cloud providers like Microsoft Azure, Oracle Cloud and Meta to enterprise server partners like Dell Technologies, Lenovo and SuperMicro. All of them offered nothing but praise and excitement. That's easy to understand, since these companies are eager for an alternative supplier to help them meet the staggering demand they now face for GenAI-optimized systems.

In addition to the MI300X, AMD also discussed the Instinct MI300A, the company's first APU designed for the data center. The MI300A uses the same GPU XCD (Accelerator Complex Die) chiplets as the MI300X, but it includes six instead of eight. The freed-up space holds 24 Zen 4 CPU cores, spread across three eight-core CPU chiplets. Thanks to AMD's Infinity Fabric interconnect technology, the CPU and GPU portions get shared, simultaneous access to the high-bandwidth memory (HBM) across the entire package.

One of the interesting technological sidenotes from the event was AMD's plan to open up its previously proprietary Infinity Fabric to a limited set of partners. No details are known just yet, but the move could conceivably lead to some interesting new multi-vendor chiplet designs in the future.

This simultaneous CPU and GPU memory access is critical for HPC-type applications. That capability is reportedly one of the reasons Lawrence Livermore National Laboratory chose the MI300A for the heart of El Capitan, the new supercomputer it is building together with HPE. El Capitan is expected to be both the fastest and one of the most power-efficient supercomputers in the world.

On the software side, AMD also made numerous announcements around ROCm, its software platform for GenAI, which has now been upgraded to version 6. As with the new hardware, the company discussed several key partnerships. Some build on previous news, such as those with open-source model provider Hugging Face and the PyTorch AI development framework, and a few key new ones debuted at the event.

Most notable was OpenAI's statement that it will bring native support for AMD's latest hardware to version 3.0 of its Triton development platform. Many programmers and organizations are eager to jump on the OpenAI bandwagon, and this will make it much easier for them to use AMD's latest hardware. It also gives them an alternative to the Nvidia-only choices they've had up until now.

The final portion of AMD's announcements covered AI PCs. The company doesn't get much credit or recognition for it, but it was actually the first to incorporate a dedicated NPU into a PC chip, with last year's launch of the Ryzen 7040.

The XDNA AI acceleration block in that chip uses technology AMD acquired through its purchase of Xilinx. At this year's event, the company announced the new Ryzen 8040, which includes an upgraded NPU with 60% better AI performance. Interestingly, AMD also previewed the next generation, codenamed "Strix Point," which isn't expected until the end of 2024.

The XDNA2 architecture in Strix Point is expected to deliver an impressive 3x improvement over the 7040. The company still needs to sell 8040-based systems in the meantime, so you could argue that teasing the new chip was a bit unusual. However, I think AMD wanted the preview to hammer home the point that this is an incredibly fast-moving market and that it's ready to compete. I believe it succeeded.

Of course, the preview was also a shot across the competitive bow at both Intel and Qualcomm, each of which will introduce NPU-accelerated PC chips over the next few months.

Beyond the hardware, AMD discussed a few AI software advancements for the PC. These include the official release of Ryzen AI 1.0 software, which makes it easier to run GenAI-based models and applications on PCs and speeds up their performance. AMD also brought Microsoft's new Windows chief, Pavan Davuluri, onstage. He talked about the two companies' work to provide native support for AMD's XDNA accelerators in future versions of Windows. He also discussed the growing topic of hybrid AI, in which companies expect to split certain types of AI workloads between the cloud and client PCs. There's much more to be done here, and across the world of AI PCs, but it's certainly going to be an interesting area to watch in 2024.

All told, AMD presented its AI story with a great deal of enthusiasm. From an industry perspective, it's great to see more competition, which will inevitably lead to even faster developments in this exciting new space (if that's even possible!). However, to really make a difference, AMD needs to keep executing well on its vision. I'm confident that's possible, but there's a lot of work still ahead of the company.

Bob O'Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on Twitter @bobodtech

