Last updated 16 months ago

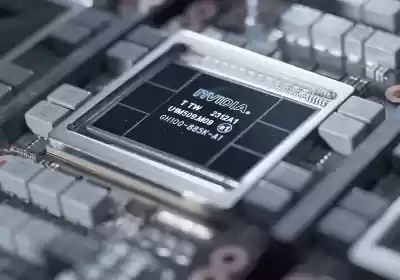

Nvidia launches H200 AI superchip: the first to be paired with 141GB of the latest HBM3e memory

Why it matters: The generative AI race shows no signs of slowing down, and Nvidia is looking to fully capitalize on it with a brand new AI superchip, the H200 Tensor Core GPU. The biggest improvement over its predecessor is the use of HBM3e memory, which allows for greater memory density and higher memory bandwidth, both important factors in improving the speed of services like ChatGPT and Google Bard.

Nvidia this week unveiled a new monster processing unit for AI workloads, the HGX H200. As the name suggests, the new chip is the successor to the wildly popular H100 Tensor Core GPU that debuted in 2022, just as the generative AI hype train started picking up speed.

Team Green introduced the new platform during the Supercomputing 2023 conference in Denver, Colorado. Based on the Hopper architecture, the H200 is expected to deliver almost double the inference speed of the H100 on Llama 2, a large language model (LLM) with 70 billion parameters. The H200 also offers around 1.6 times the inference speed when running the GPT-3 model, which has 175 billion parameters.
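Parameter counts like these translate directly into memory requirements. As a back-of-the-envelope sketch, assuming 16-bit weights (2 bytes per parameter) and ignoring the KV cache, activations, and runtime overhead, the weights alone for the two models mentioned come to:

```python
# Back-of-the-envelope weight footprint for the two models named above.
# Assumption: 16-bit (FP16/BF16) weights, i.e. 2 bytes per parameter.
# Ignores KV cache, activations, and framework overhead.
BYTES_PER_PARAM = 2


def weight_footprint_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Return the size of a model's weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


MODELS = {"Llama 2 70B": 70e9, "GPT-3 175B": 175e9}

for name, params in MODELS.items():
    print(f"{name}: ~{weight_footprint_gb(params):.0f} GB of weights at 16-bit precision")
```

By this rough measure, a 70-billion-parameter model needs about 140 GB just for its 16-bit weights and a 175-billion-parameter model about 350 GB, which is why memory capacity per GPU matters so much for these workloads. Real deployments also need headroom for the KV cache and often rely on quantization or multiple GPUs.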

Some of these performance gains come from architectural refinements, but Nvidia says it has also done significant optimization work on the software front. This is reflected in the recent release of open-source software libraries like TensorRT-LLM, which can deliver up to eight times more performance and up to six times lower energy consumption when running the latest LLMs for generative AI.

Another highlight of the H200 platform is that it's the first to use the faster HBM3e memory spec. The new Tensor Core GPU's total memory bandwidth is a whopping 4.8 terabytes per second, a good bit faster than the 3.35 terabytes per second achieved by the H100's memory subsystem. Total memory capacity has also increased from 80 GB on the H100 to 141 GB on the H200.
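To put those figures in relative terms, here is a quick sketch that simply divides the H200 numbers quoted above by the H100 numbers; no other data is assumed:

```python
# Compare the H100 and H200 memory figures quoted in the text above.
H100 = {"bandwidth_tb_per_s": 3.35, "capacity_gb": 80}
H200 = {"bandwidth_tb_per_s": 4.8, "capacity_gb": 141}


def ratio(new: float, old: float) -> float:
    """Ratio of the new figure to the old one (values > 1 mean an increase)."""
    return new / old


for metric in H100:
    r = ratio(H200[metric], H100[metric])
    print(f"{metric}: {H100[metric]} -> {H200[metric]} ({r:.2f}x)")
```

That works out to roughly 1.43 times the bandwidth (about 43% more) and 1.76 times the capacity (about 76% more) generation over generation.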

Nvidia says the H200 is designed to be compatible with the same systems that support the H100 GPU. The H200 will be available in several form factors, including HGX H200 server boards in four- or eight-way configurations, or as a GH200 Grace Hopper Superchip, where it will be paired with a powerful 72-core Arm-based CPU on the same board. An eight-way HGX H200 configuration will allow for up to 1.1 terabytes of aggregate high-bandwidth memory and 32 petaflops of FP8 performance for deep-learning applications.
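The 1.1 TB aggregate figure lines up with eight H200 GPUs at 141 GB each, which is what a fully populated eight-way board would carry. A quick check, assuming the board-level totals are simply the per-GPU figures summed across eight GPUs (splitting the 32-petaflop figure evenly per GPU is likewise an assumption):

```python
# Sanity-check the aggregate figures for an eight-way H200 board.
# Assumption: board totals are per-GPU figures summed across all GPUs.
GPUS_PER_BOARD = 8
MEMORY_PER_GPU_GB = 141
BOARD_FP8_PFLOPS = 32

aggregate_memory_gb = GPUS_PER_BOARD * MEMORY_PER_GPU_GB
fp8_pflops_per_gpu = BOARD_FP8_PFLOPS / GPUS_PER_BOARD

print(f"Aggregate HBM3e: {aggregate_memory_gb} GB (~{aggregate_memory_gb / 1000:.1f} TB)")
print(f"Implied FP8 throughput per GPU: ~{fp8_pflops_per_gpu:.0f} petaflops")
```

Eight times 141 GB gives 1,128 GB, or about 1.1 TB, and an even split of 32 petaflops implies about 4 petaflops of FP8 compute per GPU.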

Just like the H100 GPU, the new Hopper superchip will likely be in high demand and command an eye-watering price. A single H100 sells for an estimated $25,000 to $40,000 depending on order volume, and many companies in the AI space are buying them by the thousands. This is forcing smaller companies to partner up just to get limited access to Nvidia's AI GPUs, and lead times don't seem to be getting any shorter as time goes on.

Speaking of lead times, Nvidia is making a huge profit on every H100 sold, so it has even shifted some production away from the RTX 40-series toward making more Hopper GPUs. Nvidia's Kristin Uchiyama says supply won't be an issue because the company is constantly working on adding more production capacity, but she declined to provide further details on the matter.

One thing is for certain: Team Green is far more interested in selling AI-focused GPUs, as sales of Hopper chips make up an increasingly large chunk of its revenue. It's even going to great lengths to develop and manufacture cut-down versions of its A100 and H100 chips just to get around US export controls and ship them to Chinese tech giants. This makes it hard to get too excited about the upcoming RTX 4000 Super graphics cards, as availability may be a major factor in their retail price.

Microsoft Azure, Google Cloud, Amazon Web Services, and Oracle Cloud Infrastructure will be the first cloud providers to offer access to H200-based instances, starting in Q2 2024.
