Last updated 16 months ago

TensorRT-LLM for Windows speeds up generative AI performance on GeForce RTX GPUs

A hot potato: Nvidia has so far dominated the AI accelerator business in the server and data center market. Now, the company is expanding its software offerings to deliver an improved AI experience to users of GeForce and other RTX GPUs in desktop and laptop systems.

Nvidia will soon release TensorRT-LLM, a new open-source library designed to accelerate generative AI algorithms on GeForce RTX and professional RTX GPUs. The latest graphics chips from the Santa Clara company include dedicated AI processors called Tensor Cores, which now provide native AI hardware acceleration to more than 100 million Windows PCs and workstations.

On an RTX-equipped system, TensorRT-LLM can reportedly deliver up to 4x faster inference performance for the latest and most advanced large language models (LLMs) such as Llama 2 and Code Llama. While TensorRT was initially released for data center applications, it is now available for Windows PCs equipped with powerful RTX graphics chips.

Modern LLMs drive productivity and are central to AI software, as Nvidia points out. Thanks to TensorRT-LLM (and an RTX GPU), LLMs can run more efficiently, resulting in a significantly improved user experience. Chatbots and code assistants can produce multiple distinct auto-complete results simultaneously, allowing users to select the best response from the output.

According to Nvidia, the new open-source library is also useful when integrating an LLM with other technologies. This is especially relevant in retrieval-augmented generation (RAG) scenarios, where an LLM is combined with a vector library or database. RAG solutions allow an LLM to generate responses based on specific datasets (such as user emails or website articles), producing more targeted and relevant answers.
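To make the RAG idea more concrete, here is a minimal sketch of the retrieval step in plain Python: find the stored documents most similar to the user's question, then prepend them to the prompt sent to the LLM. It assumes a toy bag-of-words "embedding" and an in-memory list in place of a real embedding model and vector database, and the function names (`embed`, `retrieve`, `build_prompt`) are hypothetical. It is not part of TensorRT-LLM or Nvidia's RAG demo.

```python
import math
import re
from collections import Counter


# Hypothetical toy "embedding": a bag-of-words term-count vector.
# A real RAG pipeline would use a neural embedding model and store the
# vectors in a vector library or database instead of a Python list.
def embed(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Prepend the retrieved documents to the question as grounding context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "GeForce driver 545.84 adds RTX Video Super Resolution 1.5",
    "TensorRT-LLM accelerates Llama 2 inference on RTX GPUs",
    "Stable Diffusion generates images from text prompts",
]
print(build_prompt("Which driver adds Video Super Resolution?", docs, k=1))
```

The resulting prompt contains only the driver document, so the model answers from that specific data rather than from its general training alone. Swapping the toy `embed` for a real embedding model and the list for a vector database gives the kind of setup the paragraph describes.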

Nvidia has announced that TensorRT-LLM will soon be available for download from the Nvidia Developer website. The company already offers optimized TensorRT models and a RAG demo with GeForce news on ngc.nvidia.com and GitHub.

While TensorRT is primarily aimed at generative AI professionals and developers, Nvidia is also working on additional AI-based improvements for everyday GeForce RTX users. TensorRT can now accelerate high-quality image generation with Stable Diffusion, thanks to features such as layer fusion, precision calibration, and kernel auto-tuning.
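Of those features, precision calibration is the easiest to illustrate: to run parts of a network in INT8, an optimizer measures value ranges on representative sample data and picks a scale factor that maps floats into the 8-bit range. The sketch below shows a simplified symmetric max-abs variant in plain Python. It is an assumption-laden illustration, not TensorRT's method: TensorRT's own calibrators (such as its entropy-based one) choose the clipping range differently, layer fusion and kernel auto-tuning are not shown, and the function names are hypothetical.

```python
# Simplified symmetric INT8 calibration (max-abs / min-max style).
# Assumption: illustration only; TensorRT's calibrators (e.g. its
# entropy calibrator) pick the clipping range differently.


def calibrate_scale(calibration_values: list[float]) -> float:
    """Choose a scale so the largest observed magnitude maps to 127."""
    max_abs = max(abs(v) for v in calibration_values)
    # Guard against an all-zero calibration set.
    return max_abs / 127.0 if max_abs > 0 else 1.0


def quantize(values: list[float], scale: float) -> list[int]:
    """Map floats to signed 8-bit integers, clamping to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map INT8 values back to approximate floats."""
    return [x * scale for x in q]


calib = [12.7, -5.0, 3.0]          # hypothetical sample activations
scale = calibrate_scale(calib)
q = quantize(calib, scale)
print(scale, q, dequantize(q, scale))
```

Values seen during calibration round-trip with an error of at most half the scale, while anything larger than the calibrated range is clamped to the INT8 limit. That trade-off between range and precision is why the choice of calibration data matters.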

In addition, the Tensor Cores in RTX GPUs are being used to improve low-quality internet video streams. RTX Video Super Resolution version 1.5, included in the latest GeForce Graphics Driver release (version 545.84), improves video quality and reduces artifacts in content played at native resolution, thanks to advanced "AI pixel processing" technology.

NVIDIA LLM

Faster Transformer vs TensorRT

TensorRT Benchmarks

TensorRT C++ example github

NVIDIA Software Developer

Nvidia Myelin compiler

TensorRT Jetson Nano

NVIDIA AI tools

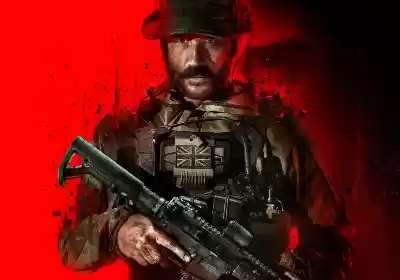

Activision justifies Call of Duty: Modern Warfare three's massive 200GB deploy size

What simply took place? Call of Duty video games have long taken up tremendous chunks of players' garage area, and CoD: Modern Warfare three isn't any one-of-a-kind. Those who preordered the game ahead of its November 1...

Last updated 16 month ago

Apple halts iOS 18 and macOS 15 characteristic development over numerous early insects

In a nutshell: Apple is usually busy running as a minimum a 12 months earlier on its operating structures. Feature development for iOS 18 and macOS 15 are properly underway. At least, they were till now. Cupertino has h...

Last updated 16 month ago

Intel to unveil Meteor Lake Core Ultra and 5th-Gen Xeon CPUs at AI occasion on December 14

Intel has showed it's going to unveil its Core Ultra CPUs, aka the Meteor Lake chips, alongside the 5th-gen Xeon Scalable processors (Emerald Rapids) at an "AI Everywhere" event on December 14 at 10am ET/7am ...

Last updated 16 month ago

Google's pinnacle-trending searches of 2023 encompass Hogwarts Legacy, ChatGPT, and a query approximately Romans

Nothing alerts the upcoming cease of a yr pretty like groups releasing yr-in-review lists. For Google, it's time for the tech giant's Trending in 2023 function, revealing the top-trending search terms over the past twe...

Last updated 15 month ago

Homeworld three calls for 12GB of RAM but most effective 40GB of garage space

Strategy video games aren't usually recognised to have the most traumatic graphics and machine necessities, however the specifications for Homeworld three advocate it is able to be a tremendous exception. Regardless of...

Last updated 15 month ago

Intel knew approximately the Downfall CPU vulnerability but did nothing for 5 years, a brand new magnificence action claims

Downfall is the maximum current of a protracted series of protection vulnerabilities discovered in Intel processors in the course of the past few years. According to a new elegance movement, Chipzilla was well aware of...

Last updated 16 month ago